Improved Mental Models

There is an evolution in the way we observe and perceive the world. In addition to our basic senses, we have advanced instruments to see, hear, detect, and measure phenomena, and even technologies such as virtual and augmented reality. We live in a complex web of cause-and-effect relationships, where delayed feedback loops make it difficult to understand the dynamics of the world around us, and our brains are naturally good at filling in the gaps to make sense of the unknown.

It is useful to view cause-and-effect relationships through a systems paradigm. A system is a set of interconnected variables, things that act and things that are acted upon, which together form the path of least resistance leading to specific phenomena. Systems thinking is a way of broadening one’s perception to see the hierarchy of structures, patterns, and feedback loops rather than isolated events.

What do you really know about the world around you? How accurate, or how useful, is your perception of reality? The human brain is easily fooled; it is limited and biased. Yet an accurate perception helps us change, manage, and control outcomes. You can change the cause to get the desired effect, but bias, fallacy, and limited knowledge get in the way.

There are reported to be more than 180 cognitive biases, and we all have them. Well-known examples include the bandwagon effect, confirmation bias, and the gambler’s fallacy. Lesser-known examples include the Google effect, cryptomnesia, and the masked-man fallacy.

EXAMPLES OF COGNITIVE BIAS

Stereotyping is assuming that someone or something has specific characteristics because of unrelated associations or similarities with a certain group. We do this with people, but we also do it with cause-and-effect relationships. For example, if you know that good hand hygiene reduces the spread of infection, you might attribute all spread of infection to poor hand hygiene.

Courtesy Bias is sharing opinions or conclusions that are framed more positively than they really are, in order to be accepted, to avoid offending others, or to avoid controversy. This might seem like a good bias to have, but it reinforces the negative effects of groupthink, bullying, and other normalized deviations.

Reactive Devaluation is devaluing an idea because it is perceived to originate from an adversarial source. Antagonists often offer sincere suggestions or solutions, some of which would even produce win-win opportunities, only to have them devalued and rejected.

HOW TO IMPROVE YOUR PERCEPTION OF REALITY

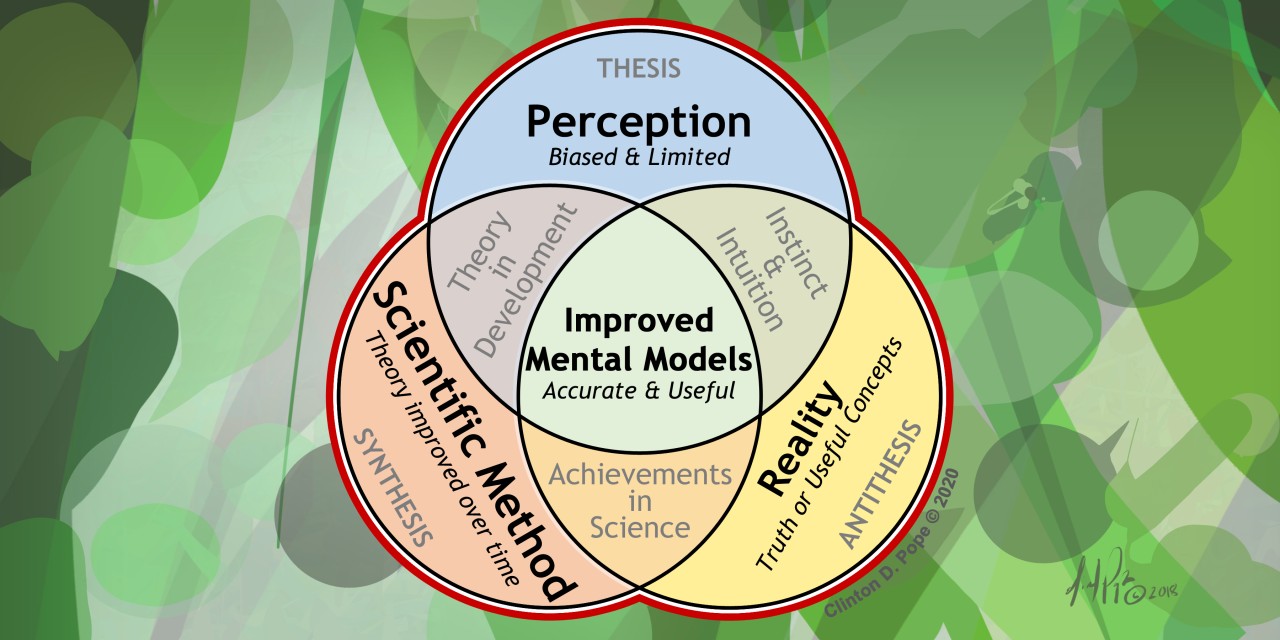

Perception is your mental model of reality: what you think is happening. The counterpart of perception is reality, truth, or the way things really are. The goal is to develop a system for improving the accuracy and usefulness of our perceptions while also identifying and removing bias. Here are six suggestions for improving mental models.

1. Acceptance

Knowing that you have bias is the first step toward improving perception and avoiding future bias. Humility is key. Actively look for evidence of bias and adjust your perception to improve understanding. Improvement requires active and willing change.

Everyone sees the world from a unique viewpoint. Accepting that the perceptions of others are also biased and limited can help us be patient, understanding, forgiving, and more open to dialogue. Rather than focusing on others’ bias and fallacy, recognize the usefulness of their unique perspective. Combining our observations with the perspectives of others helps us see through the haze of bias and fallacy. Beyond that haze is a treasure trove of powerful principles that apply across many fields of study and diverse industries.

2. Scientific Method

Knowledge is power! It is amazing what you can learn when you are free to observe under ideal conditions. Observation is most useful when it is measured, recorded, and tracked over time. Observations are the facts, and an interpretation of the facts is called a hypothesis or a theory. A theory is only as good as the accuracy and clarity of its explanation and documentation.

Unfortunately, scientific theory is often treated as if it were irrefutable. Theories are meant to be debated and tested under varied conditions to see when and how they fail to properly interpret the facts. This is not done to disprove theories, but to improve them over time. Again, we combine observations and diverse perspectives to see through the haze of bias and fallacy and improve knowledge.

This is how any knowledge is gathered. Throughout your life, you have observed your surroundings, formed your perceptions, and tested those perceptions. This has led to perceptions that you can rely on and trust, which is why it is difficult to remain open to the possibility of bias or self-deception. Debate your perceptions in your own reflections and in dialogue with others, not to disprove them but to recognize how they can be improved and stripped of bias over time.

3. Just Culture

Just culture is about eliminating fear! When fear exists in an organization, people withhold data and information that could help improve the system. To eliminate fear, recognize that systems determine outcomes. The actions of individuals have consequences, but systems determine what those consequences are, and individuals should not be held accountable for systemic failures.

When failures, mistakes, or errors occur, focus on the systemic root causes that produced the negative result. It is inappropriate to leave high-risk or problem-prone processes to people, who are fallible and prone to mistakes, and then blame the individual when mistakes occur. For critical-to-quality factors and high-risk processes, design robust controls to mitigate failure. When failure does occur, console those who were involved, be kind, and help them observe systemic root causes without fear of reprimand or humiliation. However, in cases of gross negligence or deliberate wrongdoing, individuals are held accountable for their actions.

Just culture is also about learning from failure. Encourage and reward openness and transparency. Encourage a preoccupation with failure by considering where things could lead and what could go wrong. Foster concern for the unexpected and prepare for emergencies, including how to bounce back with resiliency. Resiliency is not about repeatedly failing and bouncing back as if nothing happened. It is a healthy response to failure: pause to console those involved, without blame or shame, and then recover with improved knowledge, improved ways of operating, and new ways to prevent failure.

4. Modeling

A famous quote from the statistician George Box states that “all models are wrong, but some are useful.” In fact, some are extremely useful. Think of the mathematical models that allowed astronauts to walk on the moon, or the static and dynamic models that ensure the structural integrity of skyscrapers and bridges. Yes, models are imperfect representations of reality, but try to operate any complex process or system without them.

As we observe the world around us, we formulate mental models, or perceptions of reality. Mental models become more accurate when we reflect upon them in meditation, in journals, in writing and drawing, and in dialogue with others. The effort required to describe and teach models to others helps us think them through from different perspectives and invites feedback.

Modeling can be as simple as writing down thoughts or sketching diagrams, or as complex as prototyping working models. The most useful models are those that create dialogue for a shared vision of true north aims and shared philosophies of how to navigate toward those aims. Common models include requirements documentation, standard operating procedures, vision and mission statements, process maps (SIPOC, flow diagrams, value stream maps), and blueprints.

5. Knowledge of Variation

Variation exists all around us. Every process and system experiences variation from one iteration to the next, from one operator to the next, and from one inspection to the next. Ten people observing the same phenomenon at the same time will each see, quantify, and describe it differently. What is the average size of a brown trout? What is the average human height, and to what extent does height vary from person to person? The two most commonly used kinds of statistics for understanding variation are measures of central tendency and measures of statistical dispersion.

These statistics help describe the probabilities of the various possible outcomes, known as the probability distribution. Measures of central tendency include the mean and median, among many others. Measures of statistical dispersion quantify how spread out values are around the central tendency and include the range and standard deviation, among others.
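To make these two kinds of statistics concrete, here is a minimal sketch using Python’s standard `statistics` module. The helper name `describe` and the sample values are hypothetical, invented for illustration (think of them as brown trout lengths in centimeters), not real measurements.

```python
import statistics

def describe(samples):
    """Return common measures of central tendency and dispersion."""
    return {
        # Central tendency
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        # Dispersion
        "range": max(samples) - min(samples),
        "stdev": statistics.stdev(samples),  # sample standard deviation (n - 1 denominator)
    }

# Hypothetical lengths in centimeters, invented for illustration.
lengths_cm = [30, 32, 34, 35, 36, 38, 40]
print(describe(lengths_cm))
```

Here the mean and median coincide because the made-up values are symmetric around 35; with skewed data the two measures drift apart, which is one reason to look at more than one of them.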

Histogram 1: With a standard deviation of 2.2, the occurrences are spread more widely across the range of outcomes (more dispersion).

Histogram 2: With a standard deviation of 1.0, the occurrences are grouped more tightly within the range of outcomes (less dispersion).

A basic histogram is the easiest way to visualize a probability distribution. A histogram is a bar graph showing the count of occurrences for each possible outcome. The bars are usually tallest near the central tendency, and how spread out the bars are gives a sense of statistical dispersion. However, incorrect data can lead to false interpretations, assumptions, and theories.
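A histogram does not require special software. The sketch below uses a hypothetical helper, `text_histogram`, that counts integer outcomes and prints one row per outcome with one `#` per occurrence. The two data sets are made up: both have a mean of 5, but one is more dispersed than the other.

```python
import statistics
from collections import Counter

def text_histogram(outcomes):
    """Return a text histogram: one row per integer outcome, one '#' per occurrence."""
    counts = Counter(outcomes)
    rows = []
    # Include every value between the smallest and largest outcome, even if its count is 0.
    for value in range(min(counts), max(counts) + 1):
        rows.append(f"{value:>3} | {'#' * counts[value]}")
    return "\n".join(rows)

# Hypothetical outcomes with the same mean (5) but different spread.
wide = [1, 3, 5, 7, 9]
narrow = [4, 5, 5, 5, 6]

for label, data in [("more dispersion", wide), ("less dispersion", narrow)]:
    print(f"{label}: stdev = {statistics.stdev(data):.2f}")
    print(text_histogram(data))
```

The wider data set produces more rows with shorter bars, while the narrower one stacks its occurrences near the center, which is the visual cue for dispersion described above.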

Correct data is critical to having a correct perception. Facts should be accurate and precise, and they should have clear operational definitions. Measurement systems analysis is used to understand the integrity of collected data by studying instruments, processes, and other factors that produce errors in measurements. An operational definition describes what to measure and how to measure it. When accurate data are tracked over time, they yield valuable insights for managing and controlling systems.

This was discovered by Dr. Walter Shewhart while he was employed at Western Electric Company in the 1920s. He developed control charts to monitor performance over time. Rather than relying on traditional quality control by inspection to remove defective parts, control charts provide a simple means to bring the system under control, meaning that its outcomes are predictable within limits. By observing data over time, front-line workers can identify specific patterns, trends, and variations indicating that the system is out of control. When a system is determined to be out of control, it is economically favorable to spend resources removing the assignable causes of variation to restore control. Control charts are among the most powerful tools for understanding variation, improving perception, and controlling systems.
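As one illustration, the sketch below computes limits for an individuals (X) chart, a common type of Shewhart control chart for one measurement at a time. It assumes the conventional three-sigma limits, with sigma estimated from the average moving range divided by the constant d2 = 1.128, and it checks only the simplest out-of-control signal (a point beyond a limit); practical rule sets also look for runs and trends. The function names and the data are hypothetical.

```python
import statistics

D2 = 1.128  # bias-correction constant for moving ranges of two consecutive points

def individuals_limits(values):
    """Return (LCL, center line, UCL) for an individuals (X) chart; needs at least 2 values."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma_hat = statistics.mean(moving_ranges) / D2  # estimated process sigma
    center = statistics.mean(values)
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat

def out_of_control(values):
    """Return (index, value) pairs that fall outside the three-sigma limits."""
    lcl, _, ucl = individuals_limits(values)
    return [(i, v) for i, v in enumerate(values) if v < lcl or v > ucl]

# Hypothetical daily measurements; the last point reflects an assignable cause.
daily = [10, 11, 10, 9, 10, 11, 10, 9, 10, 20]
print(individuals_limits(daily))
print(out_of_control(daily))
```

In practice, limits are usually set from a baseline period believed to be in control and then held fixed while new points are plotted. Recomputing them with the spike included, as this sketch does, widens them somewhat, yet the spike still falls outside.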

6. Risk Management

Because of variation, things will go wrong. To have an accurate perception of possible pitfalls, risk management asks where things could lead and what could go wrong. Risks are possible events that could lead to unwanted outcomes, including bias, faulty information or assumptions, injury, delays, and higher costs. Risk management focuses on identification, assessment, design, and acceptance.

What are the limitations of your mental models? How reliable is your intuition and instinct? What are the actions and systemic components that lead to specific outcomes? Risk identification is the process of asking and answering these types of questions. This process is both objective and subjective, including the study of past failures, failures experienced by others, and failures that could theoretically occur.

A common tool for assessing risk is failure modes and effects analysis (FMEA), which breaks down a process or system and considers what could go wrong at each step or with each component. The FMEA assesses specific failures, including the multiple effects of each failure. Those effects are then assessed and scored for severity, probability of occurrence, and detectability. The goal of an FMEA is to manage and document risk through the phases of identification, assessment, and mitigation controls.
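FMEA worksheets vary, but a widely used convention scores severity, occurrence, and detection on 1 to 10 scales and multiplies them into a risk priority number (RPN) to rank failure modes. The sketch below assumes that convention; on the detection scale, a higher score means the failure is harder to detect. The class, field names, and example rows are hypothetical, and some newer FMEA guidance replaces the RPN with other prioritization schemes.

```python
from dataclasses import dataclass

@dataclass
class FailureMode:
    step: str
    failure: str
    severity: int    # 1 (negligible effect) to 10 (catastrophic)
    occurrence: int  # 1 (very unlikely) to 10 (almost certain)
    detection: int   # 1 (almost certain to be caught) to 10 (undetectable)

    def __post_init__(self):
        # Reject scores outside the assumed 1 to 10 scales.
        for name in ("severity", "occurrence", "detection"):
            if not 1 <= getattr(self, name) <= 10:
                raise ValueError(f"{name} must be between 1 and 10")

    @property
    def rpn(self) -> int:
        """Risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection

def prioritize(modes):
    """Sort failure modes from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)

# Hypothetical worksheet rows; real scores come from a cross-functional review.
modes = [
    FailureMode("Hand hygiene", "Step skipped under time pressure", 7, 5, 6),
    FailureMode("Medication label", "Wrong label printed", 8, 2, 3),
    FailureMode("Patient handoff", "Allergy not communicated", 9, 3, 7),
]
for m in prioritize(modes):
    print(f"{m.rpn:>4}  {m.step}: {m.failure}")
```

After a mitigation control is put in place, the affected row would be rescored: lowering the occurrence score, or making the failure easier to detect (a lower detection score), lowers the RPN and moves that failure mode down the list.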

Mitigation controls are implemented in the design of the system and either eliminate the risk or reduce its probability or severity, or make failures easier to detect. The design of the system refers not to the design of the model or the plans, but to the physical and metaphysical variables of the actual system. When changing the design of the system, it is important to consider the upstream and downstream effects of the change. The goal is to build and improve a robust system that can be controlled to safely deliver high-quality, desired outcomes.

And finally, risk acceptance is a process to decide what risks are acceptable. Every day we place ourselves at risk when we drive or fly, when we play sports, and when we interpret observations to form a perception of reality. It is not feasible to wait for a complete understanding of all risk before acting, so we accept risk in favor of progress.

IMPROVING PERCEPTION IS AN ITERATIVE PROCESS

The world is astonishingly complex, and faulty information is readily available through social media, opinionated news, and the wider internet. Cognitive bias and limited knowledge are at odds with our desire to have perceptions that are accurate, useful, and true to reality.

There is no magical shortcut to eliminating bias and fallacy from our perceptions. It is a process of continuous improvement. These six suggestions are offered as syntheses to bridge the gap between perception and reality. The scientific method is the structure of virtually all improvement methods. It is meant to be done iteratively to improve theory over time, getting closer and closer to reality.

The claim is that perceptions are always limited and biased in some way when juxtaposed with reality. Bias and fallacy exist in both perception and in scientific theory. At the same time, despite bias and fallacy, we advance with amazing ingenuity to create useful solutions in science, engineering, technology, and medicine. Use these six suggestions as a navigational method to gain an accurate perception of your own bias while also orienting and moving closer to truth, which will prove to be useful in your own pursuit of ingenuity.

_______________________________

About the author

Clinton D. Pope is an industrial engineer, an artist, and most proud of his role as husband and father. He has spent his career in health care and has a passion for improving and designing sustainable health systems to deliver better outcomes for patients and for health care workers. He can be reached at improve@clintpope.com or on LinkedIn at LinkedIn.com/in/ClintPope.
